The start of a new year provides an excellent opportunity to take stock and think about what comes next. In this post, I will quickly look back at what the OpenDialog team was up to in 2022 and what is coming next for the OpenDialog platform.

If you are in a rush, here is the summary: 2022 was fun, creative and intense. We focused on making the platform robust and enterprise-ready, deploying real-world solutions in close collaboration with our clients. In 2023 we will focus on delivering the most challenging part of our vision in a Conversational AI landscape that is getting ever more exciting (did someone mention ChatGPT?).

May 2022 was the first time we raised external funding (a $5m seed round). June 2022 was the first time we got dedicated office space (and we met again there).

The Platform

For the platform, it was a year of learning, consolidation and incremental improvements. The focus was less on the new and shiny, although there was a good dose of that, and more on ensuring the platform provides a solid, secure, scalable solution for our clients.

Rather than list every new feature or idea, let me share with you our view of the OpenDialog platform and how we divide capabilities across stages of the Conversational AI application journey.

At the core, we have the OpenDialog Conversation Engine. The engine manages and executes conversational applications described using the OpenDialog Conversation Description Language. This declarative language enables us to describe a wide range of conversational scenarios at varying degrees of specificity and reason about them through our context-first, multi-agent view of interactions.

The OpenDialog platform wraps around the Conversation Engine to provide the tools that a conversation designer and the rest of the team need to deploy a solution. We start with Conversation Design, followed by Natural Language Understanding support, move to Channel and External System Integration and finally, Analytics, Testing and Improvement. The last step naturally leads us back to conversation design as we continue to develop the application, and on the cycle goes.

The work with clients in 2022 has been gratifying as we saw the OpenDialog approach deliver on the initial goals of making it possible to build sophisticated conversational applications that can scale and adapt to changing needs through a no-code interface.

OpenDialog in 2023

For 2023 there is already much planned to improve each aspect of the platform, but there are also some bold new goals to achieve. I’ll focus on those here.

First, let me reiterate OpenDialog’s product hypothesis:

“The task of developing and deploying conversational applications requires the balanced integration of human-led conversation design with machine-learning prediction and explicit knowledge representation reasoning. The Conversational AI platform should work with the designer to co-create solutions, hiding underlying complexity while providing clarity of outcomes.”

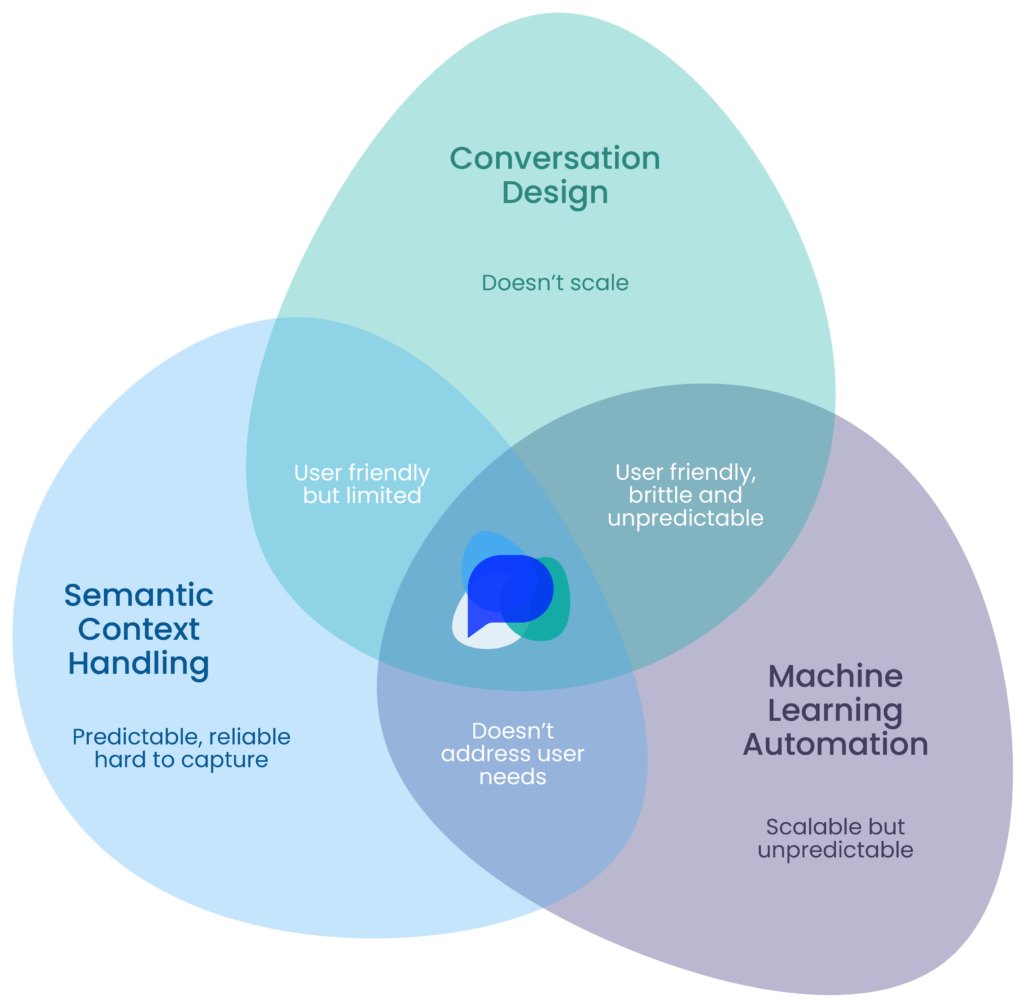

That is a mouthful, but essentially it means that to solve a real-world problem, we can’t just design it all “by hand,” and we also can’t have it all solely guided by data. There is a sweet spot where human design meets machine learning and explicit semantic reasoning. Making that sweet spot accessible to humans in a manageable way is a challenge. There are so many moving parts and possibilities that the ability to divide and conquer, encapsulate and abstract is crucial.

Finding the sweet spot between machine learning, explicit reasoning and conversation design.

The OpenDialog model, with its focus on context and its use of multi-agent ideas, makes it possible to do precisely that. However, so far, the platform has depended disproportionately on the conversation designer to define the elements of the solution. The platform then facilitates and reasons over them, but it has not been actively participating in the design process. The aim is to free up the designer to create more delightful experiences and enable them to place more trust in the platform to deal with the details.

The focus of 2023 will be to make the OpenDialog platform an active participant in the design process.

Platform and Designer work together to design and improve.

There are a few aspects to this, which we discuss below.

Double down on conversational patterns

Working with clients has made it clear that conversational patterns are vital for conversation designers. Patterns enable us to generalize and describe broader types of interactions succinctly. Understanding where those patterns occur and designing your application to support them leads to more robust, more understandable and more scalable solutions.

In 2022 we did some great work to document these patterns. We now have enough real-world usage data to determine which patterns to support explicitly within the platform. The designer will get 1-click access to them, and the engine will directly reason over them, speeding up the entire process.

To be clear, this is not about re-using templates for complete solutions (a feature that OpenDialog already has) or just about pre-built pieces you can copy and paste into your solution. I am referring to deeply embedding patterns as part of the conversation engine’s contextual reasoning and proactively helping a designer understand when a specific pattern would suit their application.

A more fluent conversation language

In addition to conversational patterns, there is a range of other capabilities that we’ve used in client projects that are worth bringing into the conversation engine to provide 1-click access to them.

There are too many ideas to list, but here are two specific examples:

- Conversational milestones: Often, it is useful to describe a particular step as a milestone or key goal of the conversation, an interaction that is more important than anything else. Having that information can help us reason about the dialogue in a more nuanced way, and it can also provide rich analytic information.

- Interpretation strategies: Interpreting what a user says is a multifaceted task. There are at least three components to it:

  - What do we think they have said linguistically?

  - What does that mean in the context of a specific domain?

  - What does that mean in the context of a specific conversation within a specific domain?

More often than not, there are explicit reasoning capabilities that we can bring to bear to mediate between these different views, and different NLU capabilities can address different parts of them. Empowering the conversation designer to choose different interpretation strategies based on their specific problem can be the critical element that raises the quality of the entire solution.
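To make the idea of interpretation strategies more concrete, here is a minimal Python sketch. It is not OpenDialog code: the three toy interpreters, the `Interpretation` type and the two strategy functions are all hypothetical names invented for illustration, and a real platform would call actual NLU services rather than match keywords. It only shows how the three views (linguistic, domain, conversation) could be combined in different ways depending on what the designer chooses.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Interpretation:
    intent: str
    confidence: float


# Hypothetical layer signature: (utterance, context) -> Interpretation or None.
Interpreter = Callable[[str, dict], Optional[Interpretation]]

NO_MATCH = Interpretation("no_match", 0.0)


def linguistic(utterance: str, context: dict) -> Optional[Interpretation]:
    # Stand-in for a general-purpose NLU model: what was said linguistically.
    if "balance" in utterance.lower():
        return Interpretation("ask_balance", 0.6)
    return None


def domain(utterance: str, context: dict) -> Optional[Interpretation]:
    # What the words mean within a specific domain (here, an assumed banking one).
    if context.get("domain") == "banking" and "statement" in utterance.lower():
        return Interpretation("request_statement", 0.8)
    return None


def conversational(utterance: str, context: dict) -> Optional[Interpretation]:
    # What the words mean at this exact point of this conversation:
    # a bare "yes" only makes sense relative to the last thing the bot asked.
    said_yes = utterance.strip().lower() in {"yes", "yeah"}
    if said_yes and context.get("last_bot_intent") == "offer_statement":
        return Interpretation("accept_statement_offer", 0.9)
    return None


def first_match(layers: List[Interpreter]) -> Interpreter:
    # Strategy 1: try layers in the given order and stop at the first answer.
    def strategy(utterance: str, context: dict) -> Interpretation:
        for layer in layers:
            result = layer(utterance, context)
            if result is not None:
                return result
        return NO_MATCH
    return strategy


def highest_confidence(layers: List[Interpreter]) -> Interpreter:
    # Strategy 2: ask every layer and keep the most confident answer.
    def strategy(utterance: str, context: dict) -> Interpretation:
        candidates = [r for layer in layers
                      if (r := layer(utterance, context)) is not None]
        return max(candidates, key=lambda r: r.confidence, default=NO_MATCH)
    return strategy
```

A designer could then pick, say, `first_match([conversational, domain, linguistic])` for a tightly scripted flow, or `highest_confidence([...])` for a more open one, without touching the individual interpreters.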

Embed Generative AI in all steps of the process

Anyone involved with Conversational AI has spent time thinking about how Large Language Models (LLMs) and tools like ChatGPT will influence the space. We can take several different perspectives on this, and we will talk more about it in the future.

However, what is already clear is that we can embed LLMs in the design process to speed up what the conversation designer can do. Think of LLMs as “language calculators”, a handy companion to you as a conversation designer that makes it possible to spend more time thinking about the big picture and have an LLM generate or transform the smaller pieces of data.

Some of the things we are working on:

- generate an alternative version of a bot message to fit a different writing style or bot persona

- generate an alternative version of a message to fit the user’s context

- generate training data for intents or entity phrases

- generate suggestions for intents

- converse with our virtual assistants to test them and test the edges of their capabilities
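As a rough illustration of the "language calculator" idea applied to training data, here is a small Python sketch. Everything in it is an assumption made for the example: `build_prompt`, `generate_training_phrases` and the `complete` callable are hypothetical names, and `complete` stands in for whichever LLM client you use. The point is only that the designer supplies the intent and a few seed phrases, and the surrounding code handles prompting and clean-up.

```python
from typing import Callable, List


def build_prompt(intent: str, seed_examples: List[str], count: int) -> str:
    # Assemble a plain-text request for paraphrases of the seed phrases.
    examples = "\n".join(f"- {example}" for example in seed_examples)
    return (
        f"Write {count} different ways a user might express the intent "
        f"'{intent}'.\n"
        f"Existing examples:\n{examples}\n"
        "Return one phrase per line."
    )


def generate_training_phrases(
    intent: str,
    seed_examples: List[str],
    count: int,
    complete: Callable[[str], str],
) -> List[str]:
    # `complete` is a hypothetical hook: any function that takes a prompt
    # and returns the model's raw text response.
    raw = complete(build_prompt(intent, seed_examples, count))

    seen = {example.lower() for example in seed_examples}
    phrases: List[str] = []
    for line in raw.splitlines():
        # Drop leading bullet characters and surrounding whitespace.
        phrase = line.strip().lstrip("-• ").strip()
        key = phrase.lower()
        # Skip blanks, repeats, and phrases the designer already has.
        if phrase and key not in seen:
            seen.add(key)
            phrases.append(phrase)
    return phrases[:count]
```

Keeping the model call behind a plain callable means the same helper can be exercised with a canned response in tests and pointed at a real model later.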

There is a lot more that we will be sharing in due course that is even more exciting, but these initial changes will improve the design experience significantly.

Training

Finally, an initiative that I am particularly excited about is the investment we are making in creating great training material and a training platform for OpenDialog. At OpenDialog we always stress that the ideas underpinning our approach are core to what we do and how we understand the platform. We have strong opinions about what Conversational Applications are and what the job of designing such applications entails. As such, it is crucial that we provide training material that clearly explains all of this and makes it easy for both new and seasoned conversation designers to get started.

We will be releasing this early this year and sharing more then.

Conclusions

As we work on our roadmap and goal to make OpenDialog a complete and proactive platform that co-designs conversational applications, it is also worth sharing a couple of thoughts on where we see the broader Conversational AI space.

Conversational AI is in a really interesting place. On the one hand, the support for enterprises to deliver this capability at scale is maturing. We are past the hesitations of earlier years, and past the hype and subsequent disappointment that surrounded the space. The question is no longer whether Conversational AI should be considered but when and how the journey should start. On the other hand, Conversational AI technologies are moving at breakneck speed. It seems there is something new and shiny every week, and the pace is picking up.

That is something that we at OpenDialog always expected (allow me this self-indulgent moment), and it is why we explicitly focused on defining a core model and fundamentals that can adapt to change. Our hypothesis, from the get-go, was that language capabilities would keep evolving quickly, and one of the big goals of a Conversational AI platform should be to empower you to incorporate new capabilities without having to do everything from scratch every year. For example, if there is a better Question & Answer technology that you can incorporate, you should be able to do that without re-designing the entire application. If you want to upgrade your intent recognition capability, it should drop into your solution without impacting anything else. If you can now automatically generate messages, or write the description of a message and have Generative AI do the rest, your model and application should support that single change without impacting the rest.
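One common way to get this kind of drop-in replaceability is to have the application depend on a small interface rather than on a specific capability. The Python sketch below illustrates that general technique; it is not a description of OpenDialog's internals, and `IntentRecognizer`, `KeywordRecognizer`, `UpgradedRecognizer` and `Assistant` are hypothetical names with deliberately trivial logic.

```python
from typing import Protocol


class IntentRecognizer(Protocol):
    # The only thing the application knows about intent recognition.
    def recognize(self, utterance: str) -> str: ...


class KeywordRecognizer:
    # "Last year's" capability: a single hard-coded keyword.
    def recognize(self, utterance: str) -> str:
        return "greeting" if "hello" in utterance.lower() else "unknown"


class UpgradedRecognizer:
    # A stand-in for a better model: handles more greetings and punctuation.
    GREETINGS = {"hello", "hi", "hey"}

    def recognize(self, utterance: str) -> str:
        words = {word.strip(".,!?") for word in utterance.lower().split()}
        return "greeting" if words & self.GREETINGS else "unknown"


RESPONSES = {
    "greeting": "Hello! How can I help?",
    "unknown": "Sorry, I did not understand that.",
}


class Assistant:
    # The conversation logic never changes when the recognizer is swapped.
    def __init__(self, recognizer: IntentRecognizer) -> None:
        self.recognizer = recognizer

    def reply(self, utterance: str) -> str:
        intent = self.recognizer.recognize(utterance)
        return RESPONSES.get(intent, RESPONSES["unknown"])
```

Upgrading is then a one-line change, `Assistant(UpgradedRecognizer())` instead of `Assistant(KeywordRecognizer())`, while the responses and the rest of the application stay as they were.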

We are excited to see all the new developments from an NLU perspective and are focusing on making them quickly and safely accessible to clients in a way that will work usefully today and remain relevant moving forward.

We are evolving the OpenDialog platform to become a proactive companion to the Conversation Designer. Our users should be able to focus on creating delightful experiences, always having access to the latest capabilities, on a platform that is safe, scalable and can accompany them into the future.