Financial pressures, staff shortages, and a high administrative burden on workers are all issues that affect patient safety and care in the healthcare industry. In recent years, however, advances in technology have made it possible to address some of these roadblocks via automation of processes such as appointment scheduling and management, patient triage, and post-discharge follow-up.

Until now, automation in healthcare has primarily relied on Robotic Process Automation (RPA) to streamline administrative tasks and workflows. However, the industry is now moving towards generative AI technologies that automate conversations through digital assistants. The result? Enhanced patient care and improved operational efficiency.

Most people are familiar with ChatGPT to some degree, and if you’ve ever used the technology, it’s unlikely you trust it implicitly. As generative AI technologies like this are relatively new, many people naturally have concerns about whether they are even safe to use in the healthcare industry.

So how do you get patients and users to trust digital assistants when they’re used in a healthcare setting? In such a regulated, life-saving sector, healthcare workers need to have complete trust in the technologies they’re using. And rightly so.

In this blog, we look at the risks of generative AI-powered conversations and at how this technology can be made safe for healthcare automation. We’ll leave no stone unturned, covering everything from regulatory compliance to AI bias.

How does Generative AI meet compliance requirements?

With rising waiting lists and increasing pressures on the healthcare industry, generative AI automation can be a game-changer when applied correctly. For instance, a digital assistant that’s available 24/7 can give patients an easy and accessible way of asking for information about an upcoming appointment, taking pressure off hospital administration staff and providing a seamless experience for patients.

But what are the risks? Due to the sensitive nature of the industry, healthcare organizations are subject to stringent regulatory requirements to safeguard patients and their information. These barriers can make it tough for healthcare organizations to adopt generative AI technologies, especially as most out-of-the-box solutions don’t adhere to the strict regulations within the industry.

For this technology to meet compliance requirements and be considered safe and trustworthy for use in healthcare, it must adhere to certain criteria like explainability and accuracy. The OpenDialog platform has been built for this exact reason, ensuring that strict guardrails are in place to safeguard against these risks while adhering to specific healthcare regulatory compliance requirements.

Is Generative AI explainable?

You might be wondering, what do we mean by explainability in conversational AI? Well, in its simplest form, explainability refers to the ability to interpret how the AI system makes decisions.

The Generative AI technology that powers ChatGPT is known as a Large Language Model (LLM) because it is trained on large amounts of language data. LLMs, like the one behind ChatGPT, use this training data to mimic human language patterns and ‘generate’ responses in real time, in a way that can feel like a human conversation. However, on their own, LLMs lack the control and accuracy of output necessary for regulated industries.

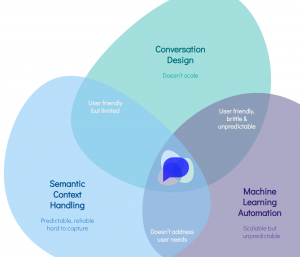

In addition, despite ongoing efforts to enhance transparency, the black-box nature of LLMs makes it hard to explain their decision-making processes. To work around this, conversational generative AI applications are often replaced with structured decision trees in order to meet healthcare industry requirements. This ultimately sacrifices natural dialog for rigid question-and-answer interactions that can be frustrating for users.

To ensure transparency in conversational AI, it is important to provide clear insight into the algorithms and data sources used, so that industry regulators can comprehend the reasoning behind a system’s decisions.

How can this be achieved? Here at OpenDialog, we offer a solution that safely harnesses generative AI language models to provide a natural conversational experience for patients and users. At the same time, the platform’s context-first architecture and safety guardrails work under the hood, analyzing and adding structure to fluid conversations.

This creates an auditable trail and enables full control over how multiple connected knowledge systems and language models are orchestrated. Healthcare organizations can then harness the full capabilities of generative AI-powered automation in a way that is safe for their users and adheres to compliance standards.

Confidence in Generative AI

Before implementing generative and conversational AI technology, healthcare organizations must be confident that digital assistants generate responses that are aligned with specific compliance and safety rules. To do that, the organization must be able to understand how the AI assistant arrived at each response.

For example…

If a patient is using an AI assistant to ask questions about their upcoming appointment, the system must first be able to understand what the patient is requesting and then be able to correctly generate an accurate and explainable response. If not, patients can be left confused or misinformed, which may result in them coming to appointments unprepared or at the wrong location, with no way of knowing what went wrong.

That’s why it’s imperative that healthcare organizations don’t just blindly adopt technology without ensuring the outputs are explainable and reliable.

The OpenDialog platform uses LLMs where relevant and combines them with rule-based processes where appropriate. This gives organizations the ability to leverage Generative AI to the best of its capacity, all while ensuring their digital assistants are in line with regulatory and patient safety standards.

In addition, OpenDialog provides business-level event tracking and process choice explanation, giving our healthcare clients a clear audit path into what decisions were made at each step of the conversation their end-users have with their AI assistant or chatbot.
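To make the idea of a per-step audit path more concrete, here is a minimal Python sketch of how decision events could be recorded for each turn of a conversation. It is an illustration only and not OpenDialog's actual API: the `AuditEvent` and `AuditTrail` names, the fields, and the JSON Lines export are all assumptions made for this example.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List


def _utc_now() -> str:
    """Return the current UTC time as an ISO 8601 string."""
    return datetime.now(timezone.utc).isoformat()


@dataclass
class AuditEvent:
    """One decision taken at one step of a conversation (hypothetical schema)."""
    conversation_id: str
    step: int
    intent: str            # what the assistant understood the user to be asking
    response_source: str   # e.g. "approved" or "generated"
    reason: str            # human-readable explanation of the choice
    timestamp: str = field(default_factory=_utc_now)


class AuditTrail:
    """In-memory audit trail; a real system would persist events durably."""

    def __init__(self) -> None:
        self._events = []  # list of AuditEvent, in insertion order

    def record(self, conversation_id: str, intent: str,
               response_source: str, reason: str) -> AuditEvent:
        # Step numbers are per conversation and start at 1.
        step = len(self.for_conversation(conversation_id)) + 1
        event = AuditEvent(conversation_id, step, intent, response_source, reason)
        self._events.append(event)
        return event

    def for_conversation(self, conversation_id: str) -> List[AuditEvent]:
        return [e for e in self._events if e.conversation_id == conversation_id]

    def to_json_lines(self) -> str:
        # One JSON object per line, convenient for log tooling and reviewers.
        return "\n".join(json.dumps(asdict(e)) for e in self._events)
```

With a structure like this, a reviewer could pull every event for a given conversation and read, step by step, which intent was detected, where the response came from, and why.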

Ensuring that conversational AI systems are designed to provide explanations for their outputs is essential. This fosters trust among users and helps healthcare organizations comply with regulatory requirements. The European Parliament’s AI Act reinforces a commitment to ethical principles such as transparency, security, and justice.

Is Generative AI accurate?

In healthcare, we strive for 100% accuracy in order to deliver effective and fair care. This is no different when it comes to new technologies like generative AI.

Leveraging this technology in healthcare has proven benefits for operational efficiency and patient experience. However, its accuracy hinges on the quality of the data the AI was trained on. It is also essential to update and maintain the system regularly to address evolving challenges and improve accuracy over time.

OpenDialog acts as the safety layer between the user and the connected language models and data sources, thereby reducing risk along the way. OpenDialog is uniquely built to reason over user input, incorporating conversation and business context before deciding whether to use a generated or a pre-approved response.
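As a rough illustration of what choosing between a generated and a pre-approved response can look like, here is a minimal Python sketch. It is not how OpenDialog is implemented: the intent names, the confidence threshold, the fallback message, and the `choose_response` function are all hypothetical and exist only to show the general shape of such a guardrail.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical pre-approved answers, keyed by detected intent.
APPROVED_RESPONSES = {
    "appointment_location": "Your appointment location is shown in your confirmation letter.",
    "appointment_prep": "Please follow the preparation steps in your confirmation letter.",
}

# Assumed policy: intents for which a generated answer is never allowed.
HIGH_RISK_INTENTS = {"medication_advice", "symptom_triage"}

# Pre-approved fallback used when the assistant should not answer freely.
FALLBACK = "I can't help with that here. Please contact the clinic directly."


@dataclass
class Decision:
    source: str  # "approved" or "generated"
    text: str
    reason: str  # kept so the choice can be explained later


def choose_response(intent: str, confidence: float,
                    generate: Callable[[str], str],
                    threshold: float = 0.8) -> Decision:
    """Pick a pre-approved or generated response from simple context rules."""
    if intent in HIGH_RISK_INTENTS:
        return Decision("approved", FALLBACK, "high-risk intent")
    if confidence < threshold:
        return Decision("approved", FALLBACK, "low confidence in detected intent")
    if intent in APPROVED_RESPONSES:
        return Decision("approved", APPROVED_RESPONSES[intent], "pre-approved answer exists")
    # Only low-risk, confidently understood requests reach the language model.
    return Decision("generated", generate(intent), "low-risk intent without approved answer")
```

Here `generate` stands in for a call to a language model. Because every `Decision` carries a `reason`, the same structure that controls the response also explains it.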

What are the privacy concerns around Generative AI in healthcare?

While generative AI technologies can have many potential benefits, as outlined in this blog, they also pose risks for those adopting the technology and its users. Regulated environments like healthcare make adoption particularly challenging, as organizations are handling extremely sensitive information and data.

These risks include data accuracy, as LLMs are prone to hallucinations and can generate inaccurate responses, and data privacy, as user input may be used to train AI models, which puts unencrypted data at risk. Both of these risks underscore the need for AI systems that are built for regulatory requirements, like the OpenDialog platform.

How to avoid bias

Addressing bias in machine learning is a significant challenge. Biases in data can lead to discriminatory outcomes, impacting patient care. AI systems can perpetuate existing biases that influence resource allocation and opportunities. Developers must actively work to identify and rectify biases in both training data and algorithms.

Regular audits and diversity in data collection can contribute to minimizing bias and ensuring fair and equitable use of generative and conversational AI in healthcare settings.
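A regular audit can start very simply. The Python sketch below compares how often conversations are successfully resolved for different user groups and flags groups that fall well behind the best-served one. The record format, the function names, and the 0.8 ratio used as a cut-off are assumptions chosen for illustration, not a regulatory standard or an OpenDialog feature.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def resolution_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of resolved conversations per group.

    Each record is (group_label, was_resolved); the labels are hypothetical.
    """
    totals = defaultdict(int)
    resolved = defaultdict(int)
    for group, was_resolved in records:
        totals[group] += 1
        if was_resolved:
            resolved[group] += 1
    return {group: resolved[group] / totals[group] for group in totals}


def flag_disparities(rates: Dict[str, float], min_ratio: float = 0.8) -> List[str]:
    """Return groups whose rate is below min_ratio times the best group's rate."""
    if not rates:
        return []
    best = max(rates.values())
    if best == 0:
        return []  # nothing was resolved for anyone; no relative gap to report
    return sorted(g for g, rate in rates.items() if rate / best < min_ratio)
```

A flagged group is a prompt for human review rather than proof of bias: the gap may come from the training data, the conversation design, or simply a small sample.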

In fact, there’s great potential for equality in AI, as over 60% of business owners believe AI can lead to more equitable societies, and 51% of adults in the US think AI would reduce bias in health and medicine. When done correctly, conversational AI can unlock both accessibility and equity.

In addition, avoiding bias in the design of your Conversational AI solution is equally important, be it in the choice of tone of voice, the level of understanding assumed, the use (or not) of jargon, the adaptability of the interface to different audiences, or something as simple as the avatar you choose to represent the organization (if there is one).

It is a vast subject, but the key takeaway is to leverage user-centric conversation design to complement AI models and to make conscious decisions about eliminating bias.

Safely Using Generative AI in Healthcare

The use of conversational automation powered by generative AI in the healthcare industry presents both opportunities and challenges. By prioritizing transparency, compliance, accuracy, and bias mitigation, healthcare professionals can work alongside AI to improve patient care while keeping patients safe.

Learn more about how conversational AI is changing healthcare.